I wanted to share a recurring WebSphere bug that I first noticed over a year ago. It was merely irritating then, but if it occurs now it can actually prevent you from deploying Connections external users the way you want.

Here’s the scenario (and it’s fairly common for me).

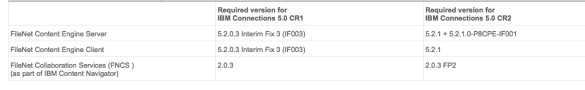

IBM Connections 5 CR2 on WebSphere 8.5.5 FP3

Primary LDAP is a Domino server

Secondary LDAP for external users is a separate Domino server in an isolated domain

When we want external users to access our Connections environment, the most secure approach is to have a dedicated LDAP server or branch for external users to appear in, especially if (as we do) you have a self-registration / password reset process for those users. The problem occurs because we want to use Domino as our LDAP. LDAP servers other than Domino are built with hierarchical entries, so on the WebSphere configuration screen where we are asked for the “unique distinguished name of base entries” the answer is easy: we just select the top level of the hierarchy. Unfortunately, in Domino LDAP we don’t always have a hierarchy. We have flat names and flat groups, so if we try to use an O=xx value, those names and groups aren’t picked up.

We used to use C=US, which would trick WebSphere into querying a level above O=, and that would work. Since WebSphere 7.0.0.23, though, we have been using the word “root”, which validates both flat names and all hierarchies on the server.

So far so great.

Now we want to add another LDAP server, which will be a Domino server where people will register. We’ll have two TDI processes: one connecting to the internal Domino server for internal users and another connecting to the external Domino server for external user access. It’s Domino, so we want to use “root” as our base entry, but WebSphere requires all federated repositories to have unique base entries and we already use “root” for our internal server. So I have to fake a hierarchy for external users just so I can add the second LDAP. It’s ugly but not unworkable. It’s also not our problem.
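The uniqueness rule is easy to check on paper before touching the admin console. Here is a minimal sketch in Python; the repository ids are made up for illustration, and the case-insensitive, whitespace-trimmed comparison is my assumption about how clashes should be spotted, not documented WebSphere behaviour.

```python
from collections import defaultdict


def normalise(base_entry):
    """Trim and lower-case each comma-separated part (assumed comparison rule)."""
    return ",".join(part.strip().lower() for part in base_entry.split(","))


def find_clashes(planned):
    """Map each base entry used more than once to the repositories using it."""
    seen = defaultdict(list)
    for repo_id, entry in planned.items():
        seen[normalise(entry)].append(repo_id)
    return {entry: repos for entry, repos in seen.items() if len(repos) > 1}


# Hypothetical repository ids; both want "root", so they clash.
planned = {"InternalDomino": "root", "ExternalDomino": "root"}
print(find_clashes(planned))

# Faking a hierarchy for the external server removes the clash.
planned["ExternalDomino"] = "o=turtlehost"
print(find_clashes(planned))
```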

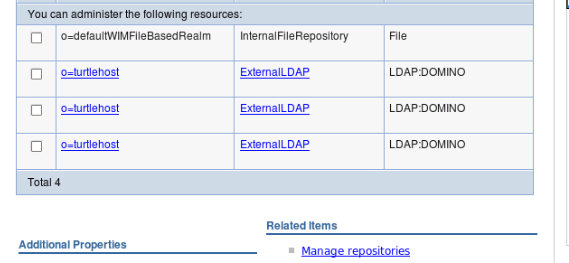

The problem is that once I add the second Domino server, or even a third, my federated repositories in WebSphere look like this:

Can you see what’s wrong? That table is read from the underlying wimconfig.xml file, found under the Deployment Manager profile in /config/cells/<cellname>/wim/config. The wimconfig.xml itself is fine, which is why all the repositories are there if I click on Manage Repositories. I just can’t edit them from this screen; I can only edit from the previous screen, and that one links to the last LDAP entry I added.

So that’s part of our problem. It’s been there for a few years, but since we could manually edit wimconfig.xml to overwrite settings, it was workable. It is caused by the “root” base entry on the first LDAP: the word “root” translates to an empty baseEntries name="" value in wimconfig.xml.

Here’s the internal LDAP with baseEntries name=""

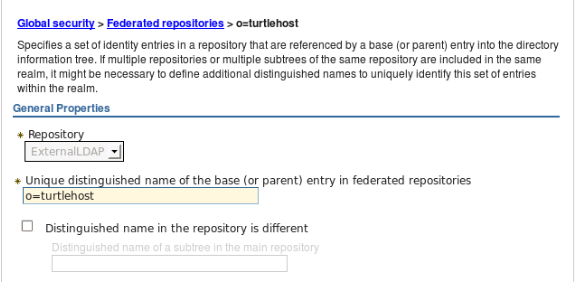

Here’s the external LDAP where I have defined a base entry of o=turtlehost
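To spot the empty entry without reading the XML by eye, something like the following Python sketch could be run against a copy of the file. The sample below is a cut-down stand-in, not the real wimconfig.xml: the namespace URI and repository ids are placeholders, and only the element names repositories and baseEntries and the name attribute are taken from the file itself.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical stand-in for wimconfig.xml. The real file carries
# many more elements and attributes; the namespace URI here is a placeholder.
SAMPLE = """<config:configurationProvider xmlns:config="urn:example:wim-config">
  <config:repositories id="InternalDomino">
    <config:baseEntries name=""/>
  </config:repositories>
  <config:repositories id="ExternalDomino">
    <config:baseEntries name="o=turtlehost"/>
  </config:repositories>
</config:configurationProvider>"""


def local_name(tag):
    """Strip the '{namespace}' prefix that ElementTree puts on tag names."""
    return tag.rsplit("}", 1)[-1]


def base_entries(root):
    """Return (repository id, base entry name) pairs found in the tree."""
    pairs = []
    for repo in root.iter():
        if local_name(repo.tag) != "repositories":
            continue
        for child in repo:
            if local_name(child.tag) == "baseEntries":
                pairs.append((repo.get("id"), child.get("name", "")))
    return pairs


def empty_base_entries(root):
    """Repository ids whose base entry name is empty (the "root" case)."""
    return [repo_id for repo_id, name in base_entries(root) if not name.strip()]


root = ET.fromstring(SAMPLE)
for repo_id, name in base_entries(root):
    print(f"{repo_id}: name={name!r}")
print("empty base entries:", empty_base_entries(root))
```

To try it on a real file, swap `ET.fromstring(SAMPLE)` for `ET.parse(path).getroot()`, pointing at a copy rather than the live configuration.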

The additional side effect of this bug (and I’m not sure we can call it a WebSphere bug, since expecting a hierarchical LDAP is fairly standard) is that the latest version of WebSphere refused to search the second, external directory. No error. Nothing. It just refused to search it, which meant those users couldn’t log in.

I edited wimconfig.xml and put O=Turtle in place of the empty baseEntries name="" etc., and that fixed both the WebSphere view and the ability of users to log in.
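For anyone who would rather script that edit than do it by hand, here is a rough Python sketch of the idea. It is not the exact change described above. It works on a cut-down, hypothetical sample with a placeholder namespace URI, and it assumes that the only values needing replacement are empty name attributes on baseEntries and participatingBaseEntries elements. A real file may need other attributes changed too, so treat this as a starting point and always work on a backup copy.

```python
import xml.etree.ElementTree as ET

NS = "urn:example:wim-config"  # placeholder, not the real namespace URI
ET.register_namespace("config", NS)  # keep the "config:" prefix on output

# Simplified, hypothetical stand-in for wimconfig.xml.
SAMPLE = f"""<config:configurationProvider xmlns:config="{NS}">
  <config:repositories id="InternalDomino">
    <config:baseEntries name=""/>
  </config:repositories>
  <config:repositories id="ExternalDomino">
    <config:baseEntries name="o=turtlehost"/>
  </config:repositories>
  <config:realmConfiguration>
    <config:realms name="defaultWIMFileBasedRealm">
      <config:participatingBaseEntries name=""/>
      <config:participatingBaseEntries name="o=turtlehost"/>
    </config:realms>
  </config:realmConfiguration>
</config:configurationProvider>"""

# Element names assumed to carry the base entry name.
TARGETS = ("baseEntries", "participatingBaseEntries")


def fill_empty_base_entries(root, new_name):
    """Set name=new_name wherever a target element has an empty name."""
    changed = 0
    for elem in root.iter():
        tag = elem.tag.rsplit("}", 1)[-1]  # drop the '{namespace}' prefix
        if tag in TARGETS and not elem.get("name", "").strip():
            elem.set("name", new_name)
            changed += 1
    return changed


root = ET.fromstring(SAMPLE)
changed = fill_empty_base_entries(root, "O=Turtle")
print(f"updated {changed} element(s)")
print(ET.tostring(root, encoding="unicode"))
```

Printing the result instead of writing it straight back makes it easy to diff against the original before anything is replaced.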

So where does that leave us? Well, it’s a problem because I want to use Domino and I don’t want to have to force a single hierarchy. C=xx doesn’t work anymore to trick WebSphere, and “root” breaks both the WebSphere view and authentication. That means I can’t have a secondary Domino server for external users and still use a “root” base entry for the internal server. Without that “root” value, the flat Domino groups will be ignored.

That leaves me with a few options

1. Force a fake hierarchy on groups so I can have a base entry value that works and not use root

2. Use Directory Assistance and “root”, but that allows external users to authenticate against my internal directory. I don’t like that

3. Use an LDAP attribute to separate external from internal users instead of a dedicated LDAP server. For security reasons I’m no fan of that either

4. Don’t use Domino for both LDAPs, only for one of them. One “root” defined Domino server will work fine