So ConnectED is over and the world has shifted a little bit more. Going into this year, the work I had been doing since 1996 on mail systems had dropped from 70%+ of my tasks to about 20% and had been replaced with projects around security, SSO, Connections, Sametime and other related WebSphere / DB2 systems. Mostly that was because the use of mail systems has plateaued and there is very little pushing at the boundaries going on, so although everyone is still heavily dependent on mail, the systems pretty much run themselves day to day. The most upheaval we had last year was related to security updates.

I like working with complex technologies so my work around Sametime, Connections and SAML continues, but I’ve also learnt that there are huge gaps in understanding around the supporting systems like LDAP and database servers. Customers are struggling with those, and with their own ability to maintain and manage the systems once they are built and in place.

Then there’s cloud. As a system designer / installer / engineer / whatever, a move to the cloud in theory means I’m out of a job, but I’ve never seen it like that. I do this because I love to deliver systems that make people’s lives easier and to continue to learn and develop myself. An IBMer said to me “I don’t see why you are happy we are doing this in the cloud, surely you’ll be out of a job?”. Leaving aside that I have no interest in holding customers back to maintain my own career, I wouldn’t get any sense of fulfilment from treading water.

I have projects spaced out across the year and I’m speaking at conferences hopefully in Belgium, Boston, Orlando (no not that one), Norway, Atlanta, UK etc. However it’s the beginning of the year and I’ve been told no-one contacts me because they think I’m flat out busy – just to be clear, I’m never too busy to take on work 🙂

So where does that leave me?

Waiting

We’re in a transitional stage with Verse, which is yet to appear outside of a limited beta in the cloud and is at least a year away from on premises. What that will change is still to be seen, so I’ll wait and decide where I land once I understand more of what it delivers, both in the cloud and on premises, and the architecture behind it. Other IBM products continue to add incremental features but nothing that would cause a seismic shift in my personal development strategy.

Teaching & Managing Supporting Technologies

The underlying technologies that these systems are dependent on are where many companies have gaps. Nothing is as important as a well-structured and reliable LDAP. LDAP directories are used for everything from authentication to data population, access rights and SSO. One of the things I want to focus on this year is giving customers a better grasp of LDAP and how to build and maintain the best system they can. Whether you are on premises or cloud, having a good directory is key to everything else you try to deploy.

DB2 and HADR. Many IBM products require a database server and pretty much all of them support DB2. Where SQL and Oracle are supported and used, there is usually an in-house database server team. However, many customers who come from a Domino background have very little DB2 experience because it’s never been needed, and often what was the Domino team has to manage it. DB2 databases need maintenance in the same way Domino databases do. The server may keep running but your performance is going to take a hit. I want to work this year on ensuring those customers who need DB2 systems have the right architecture and training to support them. Basically what I’ve done for years with Domino. You wouldn’t deploy mail without understanding how to run a Domino server, and you shouldn’t deploy Connections without understanding how to run a DB2 server.

WebSphere is now pretty much aligned across products on 8.5.x and it’s actually a fairly simple product to understand and manage. It’s just nothing like Domino. There are plenty of WebSphere courses out there but most of them cover 10% of what you need to work with Connections and Sametime (maybe 25% of Portal) and the rest is irrelevant to your day to day work. Along with doing lots of WebSphere-only projects in the past 18 months, I’ve also started doing WAS infrastructure design and workshop training for teams wanting to get up to speed with managing a WAS environment. I do the training via remote screen / web conference and it seems to work well, as it has the advantage of me being able to use the customer’s own environment to train against. I’ll be continuing to work with WebSphere architecture, specifically related to Connections and Sametime but also standalone.

Connections101

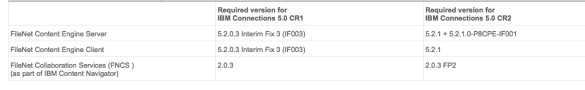

I’ve fallen behind on Connections101 since losing my fellow editor Paul, but I now have content written for building Connections 5 on a Linux platform. Every time I think I’m done I decide to add a new piece, like how to upgrade or add IBM Docs, but I’m going to go ahead and publish what I have in hand and add to it once the site is live. I’m also considering a Connections101 on deploying on iSeries. I just need to get my hands on an iSeries again (it’s been a few years since I owned one).

Are You Ready For Cloud?

With all the talk of cloud and hybrid I believe many customers are at the stage of wondering if they should be moving and if they can move. I have no incentive to recommend or not recommend that someone move, but I do understand that what salesmen often don’t tell you, or (to be fair) don’t understand, are the limitations of your existing business systems.

I am considering offering a provider-agnostic cloud assessment to help you understand what your own technical barriers to cloud may be and whether a hybrid solution will ever be an option for you. If I am able to review systems and highlight what could move, what could possibly move if it’s changed and what can never move, I’m hoping it will help customers clear out the noise and make a good strategic decision. I’d basically like to help people understand if a cloud deployment is a viable option for them now or in the future.

Domino

I’m still continuing to work with Domino and now most of my work is around healthchecks, consolidation, clustering, security and performance. It’s encouraging to see most customers upgrading Domino to newer versions. I see fewer and fewer EOL (v6, v7) versions out there and it’s still my favourite product to work with. I’m always delighted to get a new Domino project.

Sametime / Connections Chat

I’m doing a lot of work deploying the A/V elements of Sametime and designing global deployments. Once more it’s important that when the install is complete, the in-house team is able to understand and manage the environment, especially with the media elements in Sametime, which are so dependent on each other and on their interconnectivity.

Summary

So if you’re interested in deploying anything WebSphere related, in DB2, in building and managing LDAP, in Single Sign On across multiple different systems, in workshop training or in understanding if and when you might consider a hybrid cloud strategy – that’s what I’m hoping to be working on and talking about this year. I foresee another shift towards the end of 2015 (or sooner if no-one is interested in those things :-))

What do you think?