Last week I switched to a new 13in MacBook Pro with Touch Bar. I had my doubts but it was the only model with the disk and RAM I needed. I planned to just ignore the features I didn’t think I’d use (especially anything touch related, as I was fairly sure dirty or greasy fingers would render it useless).

Favourite things about my Mac week 1:

- Touch ID to log in and access admin settings. I enabled multiple fingers and added some fingerprints for other people too. It does require a full password entry every 48hrs (I think) even if I don’t restart, but I’m fine with that.

- I enabled FileVault, which encrypted my entire disk. There were issues with earlier versions of FileVault when used with Time Machine so I had avoided it, but the more recent versions (in the past 12 months or so) have been stable and there seems to be little latency on encrypting / decrypting. The main change is that I now have to log in after boot to unlock the disk, rather than log in after the OS loads. It’s an almost unnoticeable change, but I also opted to lengthen my password to a very long phrase since there’s little point encrypting a disk with a flimsy password.

- USB-C. I thought I’d hate the loss of my MagSafe power connector, given the number of times I’ve tripped over my own cable and the MagSafe popped off rather than dragging the Mac to the ground. The new Mac has 4 USB-C ports which can be used for anything including charging, and I find being able to plug the power into either of the 2 ports on either side of my Mac is so much easier than being forced to plug it into one side. It also means I’m less likely to get tangled up in my own cables.

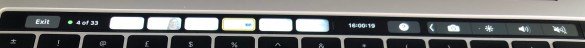

- Love my Touch Bar – LOVE. IT. I know a lot of people hate it, so clearly its appeal is closely tied to how people work. I’m very much a keyboard person (I prefer keyboard shortcuts to any mouse action, for instance) and I can configure the Touch Bar to display what I find useful in each application. I have done that in the examples below and am completely addicted.

Finder. I’ve added the “share” icon, which allows me to AirDrop items (the Touch Bar changes to photos of people I can AirDrop to), as well as Quick Look and delete. The best feature is that I can add the screenshot icon to my default Touch Bar. I screenshot all day and the key combination is hard to get working in a VM.

Safari shows me all my open tabs, which I can touch to move between them, as well as a button for opening a new tab. I added the history toggle because I go there all the time.

The Touch Bar even works in Windows 10 running in a Parallels VM, where I use the Explorer icon all the time to open Windows Explorer. I would get rid of Cortana but it’s in the default set.

Keynote mode 1: when writing a presentation I can change the page size, move through slides and indent / outdent.

Keynote mode 2: when presenting I can see a timer and the upcoming slides, which I can touch to move backwards and forwards. I think I’m going to use this a lot.

On the other hand, I also bought a new iPad mini to replace my 4-year-old iPad. I bought the mini because I didn’t want to go bigger and move up to an iPad Pro. My old iPad worked fine other than freezing in iBooks, being slow and restarting itself regularly. My new iPad, restored from a backup of my old one, exhibits the same behaviour. I think it’s going back.