By Tim Davis – Director of Development.

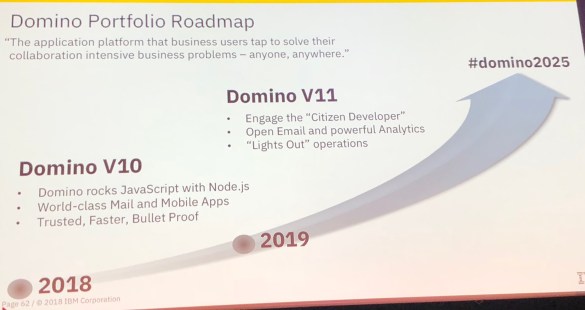

With Domino 10 bringing Node.js, and my experience of Javascript stacks over the past few years, I am very excited about the opportunities this brings for both building new apps and extending existing ones. I plan to talk about Node in future blogs, and I am giving an introduction to Node.js at MWLUG/CollabSphere 2018 in Ann Arbor, Michigan in July. However, I would like to digress from the main topic of Node and Domino itself, and talk a little about an awareness side effect that I am hoping this new feature will have, i.e. moving Domino into the Javascript full stack development space.

There are a plethora of NoSQL database products. In theory, Domino could always have been a NoSQL database layer, but there was no real reason for any Javascript stack developer to even consider it. It would never appear in any suggested lists or articles, and would require some work to provide an appropriate API.

The thing is, working in the Javascript stack world, I was made very aware that pretty much all the available NoSQL database products did not appear very sophisticated compared to Domino (or most other major Enterprise databases – Oracle, DB2, SAP, MS-SQL, etc). The emphasis seemed always on ease of use and very simple code capabilities and not much else.

Now in and of itself this is a worthy goal, but it doesn’t take long before you begin to notice the features that are missing. Especially when you compare them to Domino, which can now properly call itself a Javascript stack NoSQL database.

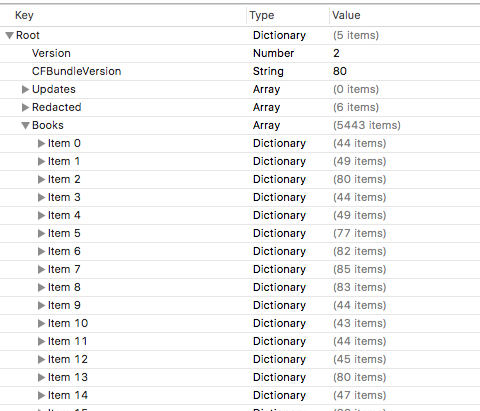

Popular NoSQL databases are MongoDb, Redis, Cassandra, and CouchDb. As with all of the NoSQL databases, each was built to solve a particular problem.

MongoDb is the one you have probably most likely heard of. It is the ‘M’ in the MEAN/MERN stacks. It is very good at scaling and ‘sharding’ which is sharing workload across many servers. It also has a basic replication model for redundancy.

Redis is an open source database whose power is speed. It holds its data in RAM which is super-fast but not so scalable.

Cassandra came from Facebook, and is a kind of mix of table data and NoSQL and is good for very large volumes of data such as IoT stuff.

CouchDb was originally developed by Damian Katz from Lotus and its key feature is replication including to local devices, making it good for mobile/offline solutions. It also has built-in document versioning which improves reliability but can result in large storage requirements.

Each product has its own flavour and would be suited to different applications but there are many key features which Domino provides, that we are used to being able to utilise, and while some of these products may have similar features, none of them have a proper equivalent for all.

Read and Edit Access: Domino has incredibly sophisticated read and edit control, to individual documents and even down to field level. You can provide access through names, groups and roles, and all of this is built-in. In the other products, anything like this has to be pretty much obfuscated by specifying filters in queries. You are effectively rolling your own security. In Domino, if you are a user not in the reader field then you can’t read the document, no matter how you try to access it.

Replication and Clustering: One of Domino’s main strengths has always been its replication and clustering model. Its power and versatility is still unsurpassed. There are some solutions such as MongoDb and CouchDb which have their own replication features and these do provide valuable resilience and/or offline potential but Domino has the most fine control and distributed data capabilities.

Encryption: Domino really does encryption well. Most other NoSQL products do not have any. Some have upgrades or add-on products that provide encryption services to some degree, but certainly none have document-level or field-level encryption. You would have to write your own code to encrypt and decrypt the individual data elements.

Full Text Indexing: Some of the other products such as MongoDb do have a full text index feature, but these tend to be somewhat limited in their search syntax. You can use add-ons which provide good search solutions, such as Solr or Elasticsearch, but Domino has indexing built-in and those indexing solutions themselves have little security.

Other Built-in Services: Domino is not just a database engine. It has other services that extend its reach. It has a mail server, it has an agent manager, it has LDAP, it has DAOS. With the other products you would need to provide your own solution for each of these.

Historically, a big advantage for the other datastores was scalability, but with Domino 10 now supporting databases up to 256Gb this becomes less of an issue.

In general, all the other NoSQL products do have the same main advantage, the one which gave rise to their popularity in the first place, and this is ease of use and implementation. In most cases a developer can spin up a NoSQL database without needing the help of an admin. Putting aside the issue of whether this is actually a good idea for enterprise solutions, with containerization Domino can now be installed just as easily.

I hope this brief overview of the NoSQL world has been helpful. I believe Domino 10 will have a strong offering in a fast growing and popular development space. My dream is that at some point, Domino becomes known as a full stack datastore, and because of its full and well-rounded feature set, new developers in startups looking for database solutions will choose it, and CIOs in large enterpises with established Domino app suites will approve further investment in the platform.